Screen reader guide for Windows, macOS, iOS, and Android: setup steps, essential shortcuts, and web browsing tips

What a screen reader is and why it matters

Learning how to use a screen reader starts with understanding software that speaks or brailles the content on screen, so information becomes perceivable without sight.

A screen reader converts text, structure, and interface states into speech or refreshable braille while exposing navigation commands that move focus efficiently.

This access model benefits blind and low-vision people first, and it also supports eyes-busy and hands-busy scenarios such as driving or cooking.

Mastering a screen reader improves independence in study, work, and everyday communication across devices and apps.

Clear mental models, consistent shortcuts, and clean page structure make the difference between frustrating experiences and fluent interaction.

Ethical and inclusive design from authors and developers further amplifies what skilled screen reader users can accomplish daily.

How to use a screen reader: core concepts that unlock fluency

A screen reader presents two layers of navigation, namely focus navigation for interactive controls and browse or reading navigation for content.

Virtual cursor or reading modes let users move by paragraphs, headings, links, landmarks, tables, and form fields with single keystrokes or gestures.

Announcements communicate roles, names, states, values, and hints so each control becomes understandable and operable without sight.
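
As a rough illustration of how name, role, state, and value combine into one spoken phrase, here is a minimal Python sketch. The `Control` class and the speaking order are assumptions made for this example; real screen readers vary the order and wording by product and verbosity setting.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Control:
    """Hypothetical model of what an accessibility API exposes for one control."""
    name: str
    role: str
    states: List[str] = field(default_factory=list)
    value: Optional[str] = None


def announcement(control: Control) -> str:
    """Join name, role, states, and value in one plausible speaking order."""
    parts = [control.name, control.role, *control.states]
    if control.value is not None:
        parts.append(control.value)
    # Skip empty parts so a missing name does not produce a stray comma.
    return ", ".join(part for part in parts if part)


print(announcement(Control("Subscribe to newsletter", "checkbox", ["not checked"])))
print(announcement(Control("Volume", "slider", value="40%")))
```

A control with an empty name would be spoken as just its role, for example "button", which is why unlabeled controls are so hard to operate.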

Speech settings control rate, pitch, volume, punctuation, and verbosity, while braille settings control translation tables, grade, and display options.

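Each screen reader anchors its shortcut chords on a dedicated modifier key, such as the NVDA and JAWS keys (Insert by default), the Narrator key (Caps Lock or Insert), and the VoiceOver modifier (Control+Option), so commands stay consistent across apps.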

Practice in safe sandboxes accelerates learning because muscle memory forms faster when consequences are low and feedback is immediate.

How to use a screen reader: vocabulary that appears often

“Focus” indicates the active control that receives input, while “caret” refers to the text insertion point.

“Landmarks” group page regions such as navigation, main, search, and footer, enabling quick jumps.

“ARIA” supplies semantic labels and roles to complex widgets so assistive technologies can expose them accurately.

How to use a screen reader on Windows (Narrator and NVDA)

Windows ships with Narrator, and the community favorite NVDA provides a powerful free alternative with frequent updates.

Setup typically involves installing NVDA, choosing a synthesizer, setting speech rate, and enabling auto-start as needed.

Essential shortcuts include NVDA+F7 for the elements list, H and Shift+H to move by headings, K and Shift+K for links, and D for landmarks.

Narrator offers similar concepts with Narrator key commands, including Narrator+Space to toggle scan mode and Narrator+V to change the verbosity level.

Reading commands like NVDA+DownArrow read from the current point, while quick nav keys traverse structures faster than Tab alone.

Web browsers such as Chrome, Edge, and Firefox pair well with NVDA, and caret browsing can be toggled for text-style navigation.

How to use a screen reader: Windows form interaction basics

Forms work best in focus mode, where keystrokes go to the focused control instead of triggering quick navigation.

NVDA toggles browse and focus with NVDA+Space, announces role and state changes, and supports labels and descriptions exposed by ARIA.

Error messages linked via aria-describedby are typically announced as the field's description when it receives focus, and they can also be reached through object navigation after a form validates input.

How to use a screen reader on macOS (VoiceOver)

VoiceOver is built into macOS and follows a rotor-based model that filters navigation targets like headings, links, form controls, and landmarks.

Initial setup includes opening VoiceOver Utility, selecting a voice, tuning rate and verbosity, and enabling activities that switch profiles per app.

Core commands rely on Control+Option (VO) as the modifier, such as VO+Right/Left to move, VO+U to open the rotor, and VO+A to read from here.

Safari integrates well with VoiceOver, and Quick Nav simplifies arrow-key navigation without holding modifiers.

The caption panel and braille panel help sighted collaborators understand what VoiceOver announces during training or testing.

Trackpad Commander enables touch gestures on Mac trackpads that mirror iOS patterns for those who prefer gesture-first workflows.

How to use a screen reader: macOS tables, lists, and groups

VoiceOver works with grouped widgets by interacting with them: VO+Shift+Down Arrow starts interacting and VO+Shift+Up Arrow stops, keeping context clear.

Tables expose row and column headers when authors use proper semantics, and table commands such as VO+C (read the column header) and VO+R (read the row) report them on demand.

Lists announce item counts, positions, and selection states so orientation never depends on sight alone.

How to use a screen reader on iOS (VoiceOver for iPhone and iPad)

VoiceOver on iOS turns touch into accessible exploration where a single finger reads items and a double-tap activates the element under the VoiceOver cursor.

The rotor gesture, namely two-finger rotate on the screen, selects navigation units such as headings, links, form controls, and characters or words.

Two-finger swipe down reads from the current item, while three-finger gestures scroll content without activating controls.

Typing strategies include touch typing or standard typing, and dictation complements keyboard entry for longer fields.

Image descriptions and subject detection help summarize unlabeled visuals when developers have not supplied alt text.

Braille Screen Input enables six-dot entry directly on glass, and external displays pair for refreshable braille access on the go.

How to use a screen reader: iOS form and app patterns

Proper labels, hints, and traits let VoiceOver announce purpose and state of switches, pickers, and sliders.

The Actions rotor exposes custom actions like “Delete” or “Archive” in list items, replacing hidden swipe-only gestures with explicit operability.

Sound and haptic feedback confirm actions, while audio ducking adjusts background sound during speech for clarity.

How to use a screen reader on Android (TalkBack)

TalkBack provides gesture-driven exploration similar to VoiceOver with two-finger scroll, double-tap to activate, and a global swipe-right/left to move focus.

The reading controls menu selects units such as headings, links, controls, and custom granularities like words or lines.

Braille support comes through the TalkBack braille keyboard and connected braille displays, and verbosity can be tuned in TalkBack settings.

Reading controls can be assigned to volume keys for one-hand operation that feels efficient on larger devices.

Select-to-Speak offers a complementary mode where highlighted content is read aloud without full screen reader control.

Developers can inspect accessibility info with “Accessibility Scanner” to identify missing labels and low contrast.

How to use a screen reader: Android text editing and selections

TalkBack exposes selection controls through the reading menu so text can be selected by character, word, or line.

Clipboard actions appear in context, and on-screen keyboards announce predictions and corrections with clarity when verbosity is tuned well.

Granular navigation proves essential for correcting typos and managing long messages or document edits.

How to use a screen reader on the web: structure first, then interaction

Effective browsing starts by jumping through landmarks to reach main content and skipping repeated navigation.

Headings provide the document outline so H and Shift+H move quickly, while numbers 1–6 jump to specific heading levels.
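
To make the idea of a heading outline concrete, the following Python sketch extracts heading levels and text from an HTML string using only the standard library. The function names and sample markup are hypothetical, and the skipped-level check is a simple heuristic rather than a conformance test.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingOutline(HTMLParser):
    """Collect (level, text) pairs for h1-h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None  # level of the heading currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            self.outline.append((self._level, "".join(self._text).strip()))
            self._level = None


def heading_outline(html):
    parser = HeadingOutline()
    parser.feed(html)
    parser.close()
    return parser.outline


def skipped_levels(outline):
    """Return headings that sit more than one level deeper than the previous one.

    The walk starts from an implied level 0, so a page that opens with h2 is flagged.
    """
    jumps = []
    previous = 0
    for level, text in outline:
        if level > previous + 1:
            jumps.append((level, text))
        previous = level
    return jumps


sample = "<h1>Guide</h1><p>Intro</p><h2>Setup</h2><h4>Voices</h4>"
print(heading_outline(sample))
print(skipped_levels(heading_outline(sample)))
```

The outline is roughly what a headings list shows, and a jump from level 2 to level 4 is the kind of gap that makes navigation by heading level less predictable.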

The elements list reveals links, form fields, buttons, and regions in a searchable view that shortens long pages.

ARIA landmarks such as banner, navigation, main, complementary, and contentinfo make orientation immediate on complex layouts.
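
A landmark list can be approximated with a short Python sketch that maps common HTML elements to the landmark roles named above. This is a simplification under stated assumptions: it ignores nesting rules (for example, a header inside an article is not a banner), and the function name and sample markup are made up for illustration.

```python
from html.parser import HTMLParser

# Landmark roles implied by HTML elements (simplified: nesting context is ignored).
IMPLIED_ROLES = {
    "header": "banner",
    "nav": "navigation",
    "main": "main",
    "aside": "complementary",
    "footer": "contentinfo",
}
LANDMARK_ROLES = set(IMPLIED_ROLES.values()) | {"search"}


class LandmarkLister(HTMLParser):
    """Collect (role, aria-label) pairs for landmark regions in document order."""

    def __init__(self):
        super().__init__()
        self.landmarks = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # An explicit role attribute takes precedence over the element's implied role.
        role = attributes.get("role") or IMPLIED_ROLES.get(tag)
        if role in LANDMARK_ROLES:
            self.landmarks.append((role, attributes.get("aria-label")))


def list_landmarks(html):
    parser = LandmarkLister()
    parser.feed(html)
    parser.close()
    return parser.landmarks


page = (
    '<header></header><nav aria-label="Primary"></nav>'
    '<main></main><div role="search"></div><footer></footer>'
)
for role, label in list_landmarks(page):
    print(role, "-", label or "(no label)")
```

When a page has several regions with the same role, such as two navigation blocks, a label on each helps tell them apart in the landmarks list.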

Descriptive link text prevents ambiguity, and skip links allow instant access to primary content without tabbing through menus.

Keyboard-operable interfaces, visible focus indicators, and logical tab order complete the foundation for smooth exploration.

How to use a screen reader: forms, dialogs, and dynamic content on the web

Labels connected to inputs by “for” and “id” make purpose understandable, while aria-describedby attaches help and error details.
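
The label association described above can be checked mechanically. The sketch below, written with Python's standard library, lists form fields that have no matching label; the class name, function name, and sample form are hypothetical, and the check covers only `for`/`id` pairs, wrapping labels, `aria-label`, and `aria-labelledby`.

```python
from html.parser import HTMLParser

FIELD_TAGS = {"input", "select", "textarea"}
# Input types that do not take a separate text label.
SKIPPED_TYPES = {"hidden", "submit", "button", "reset", "image"}


class LabelAudit(HTMLParser):
    """Record form fields and the ids that label elements point to."""

    def __init__(self):
        super().__init__()
        self.label_targets = set()
        self.fields = []  # (id or None, already_named)
        self._open_labels = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "label":
            self._open_labels += 1
            if attributes.get("for"):
                self.label_targets.add(attributes["for"])
        elif tag in FIELD_TAGS:
            field_type = (attributes.get("type") or "text").lower()
            if field_type in SKIPPED_TYPES:
                return
            named = bool(
                attributes.get("aria-label")
                or attributes.get("aria-labelledby")
                or self._open_labels  # field sits inside a wrapping <label>
            )
            self.fields.append((attributes.get("id"), named))

    def handle_endtag(self, tag):
        if tag == "label" and self._open_labels:
            self._open_labels -= 1


def unlabeled_fields(html):
    """Return ids (or None when a field has no id) of fields without a label."""
    audit = LabelAudit()
    audit.feed(html)
    audit.close()
    return [
        field_id
        for field_id, named in audit.fields
        if not named and field_id not in audit.label_targets
    ]


form = (
    '<label for="email">Email</label><input id="email" type="email">'
    '<input id="phone" type="tel">'
    '<label>Name <input id="name"></label>'
    '<input type="hidden" id="token">'
)
print(unlabeled_fields(form))
```

Here only the phone field is reported, because it has an id but nothing that names it.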

Proper dialog roles, focus trapping, and aria-modal keep context contained and prevent background confusion.

Live regions announce updates without stealing focus, which is vital for notifications, search suggestions, and chat messages.

How to use a screen reader: speech, braille, and verbosity tuning

Speech rate should be high enough for efficiency yet intelligible for comprehension, and punctuation levels should match the task.

Verbosity profiles help reading dense code or math differently from casual articles or chats.

Braille users often prefer contracted braille for speed and computer braille for paths and passwords.

Earcons and progress tones communicate loading, errors, and state changes without interrupting speech.

Personal dictionaries correct mispronunciations for names and domain-specific terms to maintain professionalism.

Activity-based profiles switch settings automatically when specific apps open so cognitive load stays low.
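
One way to picture activity-based profiles is as per-app overrides layered on top of default settings. The Python sketch below is purely illustrative: the setting names, values, and app names are assumptions, not the configuration format of any particular screen reader.

```python
# Hypothetical default speech settings (rate as a percentage of maximum).
DEFAULT_PROFILE = {"rate": 60, "punctuation": "some", "verbosity": "medium"}

# Hypothetical per-app overrides; anything not listed falls back to the default.
APP_PROFILES = {
    "code editor": {"punctuation": "all", "verbosity": "high"},
    "chat": {"rate": 75, "verbosity": "low"},
}


def settings_for(app_name):
    """Merge the app's overrides, if any, over the default profile."""
    return {**DEFAULT_PROFILE, **APP_PROFILES.get(app_name, {})}


print(settings_for("code editor"))
print(settings_for("web browser"))
```

Keeping overrides small makes it easier to see what actually changes per app, and an app without a profile simply uses the defaults.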

How to use a screen reader: hardware and layout considerations

A full-size keyboard with well-spaced keys reduces fatigue for long sessions.

External numpads and function rows map nicely to screen reader commands for power users.

Ergonomic chairs, wrist rests, and proper desk heights prevent strain during extended reading and editing.

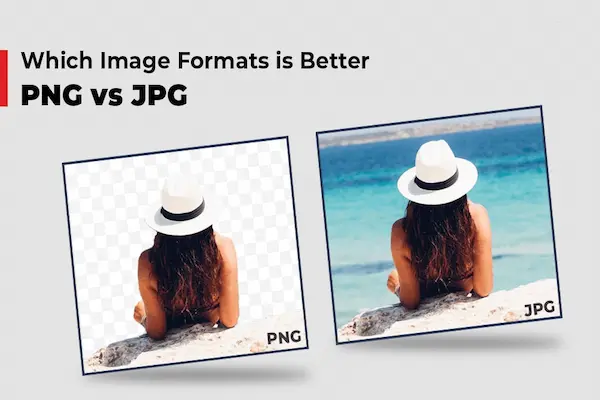

Documents, PDFs, and media

Well-tagged PDFs expose headings, lists, tables, and alt text so reading order matches visual layout.

Office formats such as DOCX and PPTX remain accessible when authors use built-in styles, true lists, and table headers.

Captioned video and transcripts make media searchable and understandable without fighting the time slider.

Math content becomes accessible through MathML or generated speech rules, avoiding flat images of equations.

E-book formats with proper navigation landmarks and page numbers empower long-form reading without losing place.

Cloud editors should announce collaboration presence, comments, and suggestion changes through ARIA-aware widgets.

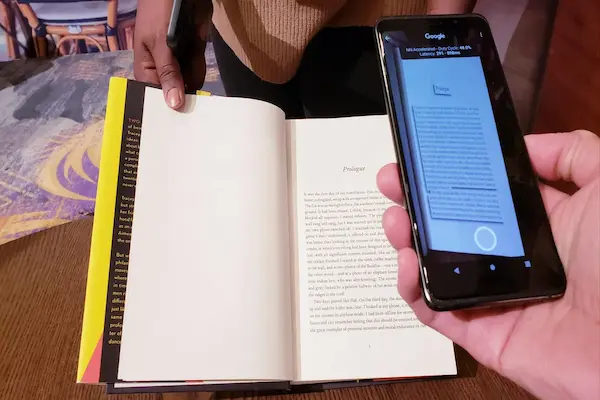

How to use a screen reader: exporting and scanning workflows

Accessible exports retain tags, bookmarks, and alt text, while flat exports lose structure and slow navigation.

OCR tools can lift text from scans, but proper tagging remains the gold standard for reliable reading order and search.

How to use a screen reader: common mistakes and quick fixes

Unlabeled buttons, vague links like “click here,” and empty headings slow every task and erode trust.
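
Vague or empty link text is easy to flag automatically. The following Python sketch collects each link's text (or its `aria-label`, or the `alt` text of an image inside it) and reports links whose text is empty or matches a short list of vague phrases. The phrase list, names, and sample markup are assumptions chosen for illustration.

```python
from html.parser import HTMLParser

# Illustrative list; extend it for your own content.
VAGUE_PHRASES = {"click here", "here", "read more", "more", "learn more"}


class LinkTextAudit(HTMLParser):
    """Collect (href, text) pairs for links, preferring aria-label when present."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._in_link = False
        self._href = None
        self._label = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a":
            self._in_link = True
            self._href = attributes.get("href")
            self._label = attributes.get("aria-label")
            self._text = []
        elif tag == "img" and self._in_link and attributes.get("alt"):
            # Alt text of an image inside a link contributes to the link's name.
            self._text.append(attributes["alt"])

    def handle_data(self, data):
        if self._in_link:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            text = self._label or " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._in_link = False


def vague_links(html):
    """Return links whose text is empty or one of the vague phrases."""
    audit = LinkTextAudit()
    audit.feed(html)
    audit.close()
    return [
        (href, text)
        for href, text in audit.links
        if not text or text.lower().rstrip(".!") in VAGUE_PHRASES
    ]


page = (
    '<a href="/pricing">See pricing plans</a>'
    '<a href="/docs">Click here</a>'
    '<a href="/home"><img src="logo.png"></a>'
)
print(vague_links(page))
```

A check like this only catches obvious cases; whether link text makes sense out of context still needs a human read-through of the links list.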

Keyboard traps prevent exit from widgets, and non-semantic div soup hides structure that screen readers rely on.

Custom controls without roles, names, or states force guesswork where clarity should be automatic.

Color-only cues and low contrast hide meaning from many people, not just screen reader users.

Complex tables without headers leave relationships ambiguous and break comprehension.
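
A first-pass check for header cells can also be scripted. The Python sketch below counts `th` cells, scoped headers, and captions per table; the names and sample markup are hypothetical, and the check says nothing about whether the headers are actually correct for the data.

```python
from html.parser import HTMLParser


class TableHeaderAudit(HTMLParser):
    """Summarize caption and header-cell usage for each table in the markup."""

    def __init__(self):
        super().__init__()
        self.results = []  # one summary dict per table, in closing order
        self._stack = []   # open tables, innermost last (handles nesting)

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "table":
            self._stack.append(
                {"has_caption": False, "header_cells": 0, "scoped_headers": 0}
            )
        elif self._stack:
            current = self._stack[-1]
            if tag == "caption":
                current["has_caption"] = True
            elif tag == "th":
                current["header_cells"] += 1
                if attributes.get("scope") in ("col", "row", "colgroup", "rowgroup"):
                    current["scoped_headers"] += 1

    def handle_endtag(self, tag):
        if tag == "table" and self._stack:
            self.results.append(self._stack.pop())


def audit_tables(html):
    audit = TableHeaderAudit()
    audit.feed(html)
    audit.close()
    return audit.results


good = (
    '<table><caption>Shortcuts</caption>'
    '<tr><th scope="col">Key</th><th scope="col">Action</th></tr>'
    '<tr><td>H</td><td>Next heading</td></tr></table>'
)
bad = '<table><tr><td>H</td><td>Next heading</td></tr></table>'
for summary in audit_tables(good + bad):
    print(summary)
```

A table reporting zero header cells is a candidate for review: it is either a data table missing its headers or a layout table that should not be a table at all.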

Quick audits with automated tools plus manual checks catch the bulk of issues before content ships.

How to use a screen reader: practical troubleshooting steps

If speech falls silent, checking audio output, mute keys, and screen reader on/off states usually resolves the issue.

If navigation feels stuck, toggling browse versus focus mode or reloading virtual buffers restores movement.

If a page seems empty, headings and landmarks lists often reveal hidden structure and safe jump targets.

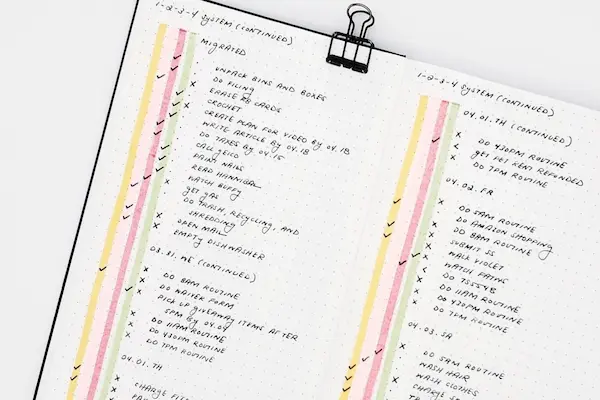

Learning plan and practice routines

Short daily drills build muscle memory faster than occasional long sessions.

A personal cheat sheet of five to ten highest-value commands keeps focus on actions with the biggest payoff.

Sandbox sites with clear structure, such as documentation portals or news pages, make early practice encouraging.

Mentoring circles and user groups share tips, voices, scripts, and add-ons that match specific professions.

Periodic device-wide reviews refine speech and braille settings as skills grow and tasks change during the year.

Confidence compounds when successes are logged, shortcuts are refined, and friction is documented for future improvement.

How to use a screen reader: collaborating with designers and developers

Explaining mental models, desired announcements, and navigation expectations guides accessible patterns early.

Structured content saves time for everyone because one good design benefits sighted keyboard users too.

Bug reports with role, name, state, and reproduction steps speed fixes and improve cross-team empathy.
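
A consistent report format helps here. The sketch below shows one possible template as a small Python data class; the field names and the sample report are hypothetical and meant only to show what a report with role, name, state, and reproduction steps might contain.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AccessibilityBug:
    """Hypothetical bug-report template; field names are illustrative."""
    summary: str
    screen_reader: str
    browser: str
    expected: str  # role, name, and state the user expected to hear
    actual: str    # what was actually announced
    steps: List[str] = field(default_factory=list)

    def to_text(self) -> str:
        lines = [
            f"Summary: {self.summary}",
            f"Environment: {self.screen_reader} with {self.browser}",
            f"Expected announcement: {self.expected}",
            f"Actual announcement: {self.actual}",
            "Steps to reproduce:",
        ]
        lines += [
            f"  {number}. {step}"
            for number, step in enumerate(self.steps, start=1)
        ]
        return "\n".join(lines)


report = AccessibilityBug(
    summary="Cart remove button has no accessible name",
    screen_reader="NVDA",
    browser="Firefox",
    expected="Remove item, button",
    actual="button",
    steps=["Open the cart page", "Press B to move to the first button"],
)
print(report.to_text())
```

Stating the expected and actual announcements side by side tells a developer which of role, name, or state is missing without needing to reproduce the issue first.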

Next steps for using a screen reader

Fluent screen reader use grows from clear concepts, tuned settings, and consistent practice across Windows, macOS, iOS, and Android.

Structure-first navigation, role and state announcements, and strong keyboard or gesture habits keep tasks efficient and predictable.

Content authored with semantics and respect for standards multiplies the impact of skilled users and reduces friction for everyone.

A simple weekly routine that reviews shortcuts, tests pages, and updates profiles turns learning into lasting independence and productivity.

Inclusive habits at the individual and team level ensure that screen reader use remains practical, humane, and future-proof.